Key Takeaways

- True on-device assistants like Gemini Nano are revolutionizing the smartphone experience.

- Specialized LLMs are the future, offering tailored knowledge for specific tasks or industries.

- Improved smart home integration with LLMs can lead to more intuitive commands and seamless automation.

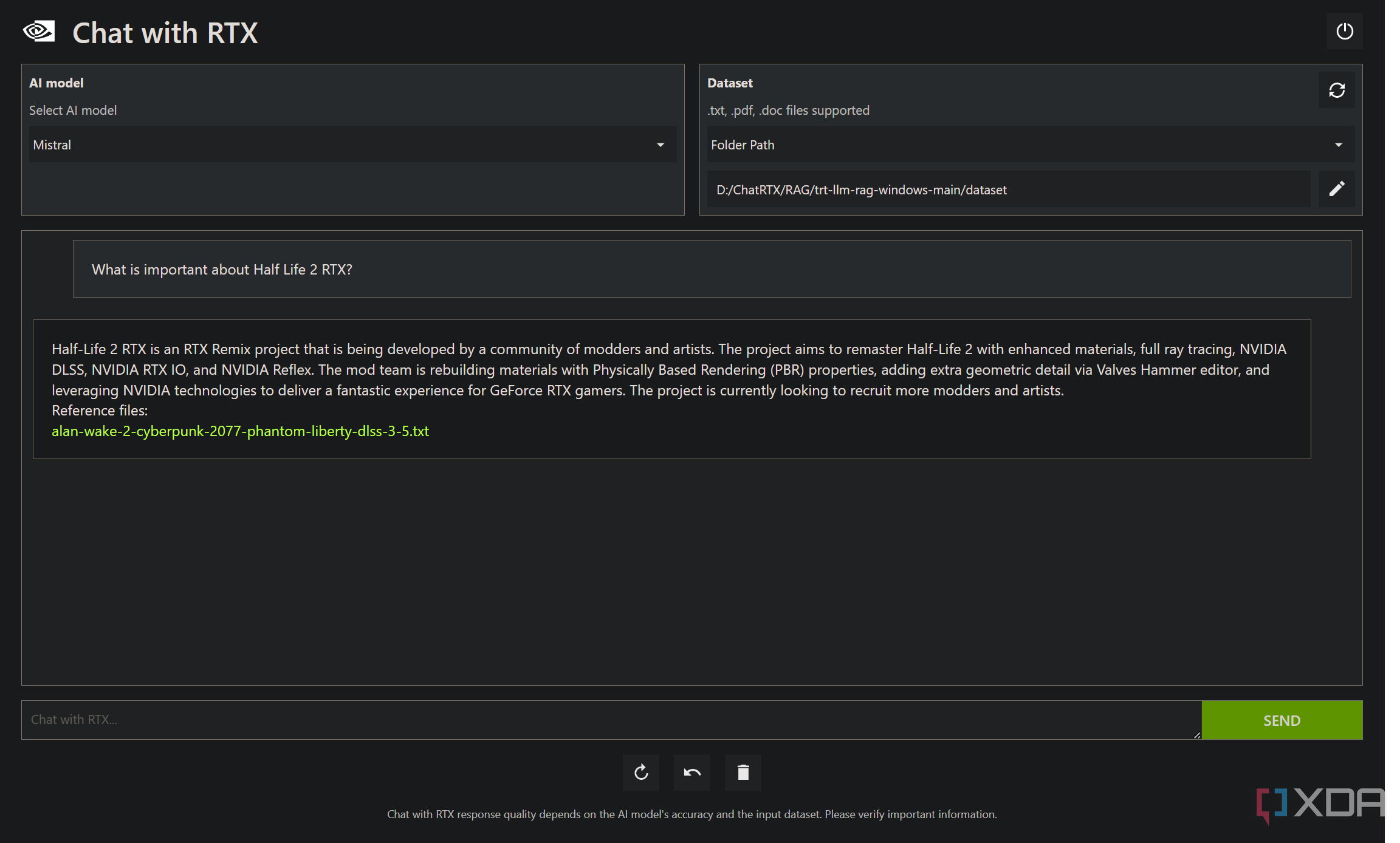

The world of large language models has been slowly ticking along, with new improvements and changes arriving over time. We’ve seen several iterations of GPT-4 as OpenAI continues to improve the model, and advancements like Retrieval-Augmented Generation (RAG) have started to power local solutions like Nvidia’s Chat with RTX. There’s a lot more that could be happening in the world of language models, though, and these are the things that we’d love to see next.

1 True on-device assistants

Improve on Siri and the Google Assistant

On-device assistants have been a thing for years now, but they tend to be pretty basic and rely on the cloud to provide their best features. LLMs like Gemini Nano can run on-device, and it’s currently being tested on the likes of the Google Pixel 8 Pro and the Samsung Galaxy S24. These are the true on-device assistants that we first envisioned with smartphones years and years ago, and they can make our smartphones more powerful than ever.

In the future, these assistants should hopefully be able to control your device for you too, though safeguards may be needed to ensure that they behave appropriately. Being able to ask an on-device assistant to enable dark mode, connect to a Wi-Fi network, or install an app for you all sounds great, but that kind of access can also be abused. There’s a lot of work needed in this area, but there’s so much potential, too.
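One way to picture such a safeguard is a simple risk check that runs before the assistant acts. The sketch below is purely illustrative: the action names, the risk levels, and the `authorize` function are all assumptions made up for this example, not part of Gemini Nano or any real assistant API.

```python
# Hypothetical action names and risk levels, invented for illustration.
RISK = {
    "enable_dark_mode": "low",
    "connect_wifi": "medium",
    "install_app": "high",
}

def authorize(action, user_confirmed=False):
    """Return True if the assistant may carry out the requested action."""
    level = RISK.get(action)
    if level is None:
        return False          # unknown actions are refused outright
    if level == "low":
        return True           # harmless settings changes run immediately
    return user_confirmed     # everything else needs an explicit yes from the user

print(authorize("enable_dark_mode"))                  # low risk, no prompt needed
print(authorize("install_app"))                       # blocked until the user confirms
print(authorize("install_app", user_confirmed=True))  # allowed after confirmation
```

The point of the design is that the model only proposes an action, while a small piece of ordinary code decides whether it actually runs.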

2 Training for specific domains

LLMs built for specific purposes

This is already happening, but I think that LLMs trained for specific purposes are the future of large language models. For example, a model trained on Python code (and only Python code) would be a massive help to anyone programming in Python. A larger, more general corpus of data does go a long way too, but by and large, focused training on specific topics should be a boon for fighting hallucinations and other problems.

LLMs like these are also great for big companies with a large amount of internal documentation, and this goes back to RAG, too. Given a huge set of documents, even a relatively underpowered LLM can still answer well, because the retrieval step pulls the relevant passages and hands them to the model. An internal employee helper is exactly the kind of situation where this would work well: the company gets a helper that can answer employee questions without risking leaking data to a third party, provided it runs on the company’s own hardware.
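To make the retrieval idea concrete, here is a minimal sketch in Python. It is not how Chat with RTX or any particular product works: the documents are made up, and simple word overlap stands in for the embedding search that real RAG systems typically use. The names `DOCS`, `retrieve`, and `build_prompt` are assumptions for this example.

```python
import re
from collections import Counter

# Hypothetical internal documents; in practice these would be chunks of company docs.
DOCS = {
    "vacation": "Employees accrue vacation days monthly and request leave through the HR portal.",
    "vpn": "To connect to the VPN, install the client and sign in with your employee account.",
    "expenses": "Submit expense reports with receipts within 30 days of purchase.",
}

def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def retrieve(question, docs, top_k=1):
    """Rank documents by shared words with the question (a stand-in for embedding search)."""
    q = Counter(tokenize(question))
    scored = []
    for name, text in docs.items():
        d = Counter(tokenize(text))
        overlap = sum(min(q[w], d[w]) for w in q)
        scored.append((overlap, name))
    # Highest overlap first; ties broken alphabetically so results are stable.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for score, name in scored[:top_k] if score > 0]

def build_prompt(question, docs):
    """Put only the retrieved text in front of the model, not the whole corpus."""
    context = "\n".join(docs[name] for name in retrieve(question, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

print(build_prompt("How do I connect to the VPN?", DOCS))
```

Because the model only ever sees the handful of passages that were retrieved, it doesn’t need to have memorized the documentation, which is why a smaller model can get away with it.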

3 Better smart home integration

Hey Google, turn on the lights

As it stands, Alexa and the Google Assistant already have quite a few smart home integrations, but the commands are very rigid. There’s no conversational element, and sometimes they struggle depending on how a request is phrased. I don’t mean accents either; I mean something like a turn of phrase. Hiberno-English, the dialect of English that we speak in Ireland, has a ton of idioms that are direct translations from Irish. While Alexa has gotten better with these, it can sometimes still struggle. An LLM trained on a wide corpus of data, however, would typically understand what these mean.

To that end, being able to say something like “Hey Google, when I get home, please turn on the lights and set the thermostat to 70 degrees Fahrenheit” is something that I’m sure many people wish they could just say to their phone with confidence that it will work. These are the use cases where I think LLMs can really benefit users, but it needs to be done correctly.
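Doing it correctly probably means the model turns a loose spoken request into something strict that the smart home system can check before acting. The sketch below assumes a made-up JSON format and a hypothetical `ALLOWED` table of devices and actions; it is not how Google Home or Alexa work, and the example output is hand-written rather than produced by a real model.

```python
import json

# Hypothetical schema: which devices exist and which actions each one accepts.
ALLOWED = {
    "lights": {"turn_on", "turn_off"},
    "thermostat": {"set_temperature"},
}

def parse_routine(model_output):
    """Validate the JSON that an LLM is assumed to return for a spoken request."""
    routine = json.loads(model_output)
    for step in routine["actions"]:
        device, action = step["device"], step["action"]
        if action not in ALLOWED.get(device, set()):
            raise ValueError(f"unsupported action: {device}.{action}")
    return routine

# What a model might return for the request above (hand-written, not real model output).
example = """{"trigger": "arrive_home", "actions": [
  {"device": "lights", "action": "turn_on"},
  {"device": "thermostat", "action": "set_temperature", "value": 70, "unit": "F"}]}"""

routine = parse_routine(example)
print(routine["trigger"], [step["action"] for step in routine["actions"]])
```

The LLM handles the conversational part, and the validation step rejects anything the house can’t actually do.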

4 Proper tool use

GPT-4 with its code interpreter is the first step

I would love for an LLM to be properly capable of making use of tools. Give it a calculator, research assistants, an IDE, anything that it can use to make it a better service. As it stands, asking GPT-4 to calculate something will see it write a Python program to compute the answer, which is certainly a step in the right direction. For contrast, Google’s Gemini Advanced and Copilot won’t do that, instead guessing the answer based on what’s closest in their knowledge bases.

All of that is to say that giving LLMs tools will make them a lot more powerful very quickly, and I think that’s exactly what OpenAI is working on currently. ChatGPT Plus with GPT-4 is already doing a great job in this department, and I want to see it do even better.
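As a rough illustration of what “giving an LLM a calculator” can look like, the sketch below shows a tiny tool dispatcher. This is an assumption about the general pattern, not OpenAI’s code interpreter: the `TOOLS` table, the `run_tool_call` function, and the shape of the tool request are all invented for this example.

```python
import ast
import operator

# Arithmetic operators the calculator is willing to evaluate.
_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub,
    ast.Mult: operator.mul, ast.Div: operator.truediv,
    ast.Pow: operator.pow, ast.USub: operator.neg,
}

def calculator(expression):
    """Evaluate plain arithmetic by walking the syntax tree instead of calling eval()."""
    def ev(node):
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError("unsupported expression")
    return ev(ast.parse(expression, mode="eval").body)

# Hypothetical registry of tools the model is allowed to call.
TOOLS = {"calculator": calculator}

def run_tool_call(call):
    """Dispatch a tool request; `call` mimics what a model might emit."""
    return TOOLS[call["tool"]](call["input"])

print(run_tool_call({"tool": "calculator", "input": "1234 * 5678"}))  # 7006652
```

Instead of guessing at the product of two numbers, the model asks for the tool, and the exact result is handed back for it to use in its answer.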

The future of LLMs is bright

If you’re an AI enthusiast, then the advancements in LLMs have to be exciting. GPT-5 is potentially on the horizon and could arrive this summer, and OpenAI CEO Sam Altman has said that GPT-4 “sucks” in comparison. That’s got to get you excited. With GPT-4 already demonstrating tool use through the code interpreter and on-device assistants beginning to roll out, I suspect that LLMs will soon be better tools than they have ever been.
