Key Takeaways

- Chips are crucial for AI performance in devices and cloud servers, enabling faster compute speeds and supporting more demanding workflows.

- Major tech companies like Nvidia, Intel, AMD, Google, Meta, and Microsoft are all competing in AI chipmaking.

- Success in AI software depends on developing advanced AI chips, and Nvidia's lead over everyone else shows there is a financial incentive for chipmaking, too.

It's easy to see the consumer-level applications of artificial intelligence in 2024, from software tools like OpenAI's ChatGPT to hardware products like Humane's AI Pin. However, these are just the tip of the iceberg when it comes to AI development. Whether you're looking at AI-based software or hardware, its success can be traced back to chipmaking. Some of these products and services rely on on-device AI processing, while others offload the processing to cloud servers. Either way, custom silicon is the backbone of AI, and that's why every major tech company is engaged in a behind-the-scenes race to make the best AI processors for AI PCs and cloud servers alike.

Why chips are crucial to AI performance

They make AI applications possible, whether they’re on-device or in the cloud

Smartphones and laptops are starting to include neural processing units (NPUs) designed specifically to handle AI-related tasks. Compared to a traditional CPU or GPU, an NPU is built to run the highly parallel math behind neural networks more efficiently. So, while a CPU or GPU can still process a request that relies on machine learning or deep learning, an NPU can run it faster and with less power. Using an NPU instead of other processor types not only allows for faster AI computation, but also makes more demanding workflows practical on-device. That's why Apple has shipped systems-on-a-chip with a Neural Engine for years in both its smartphones and its computers. NPUs have recently become a big part of PC hardware, too: the two biggest names in consumer-grade processing, Intel and AMD, have both incorporated NPUs into their chips.

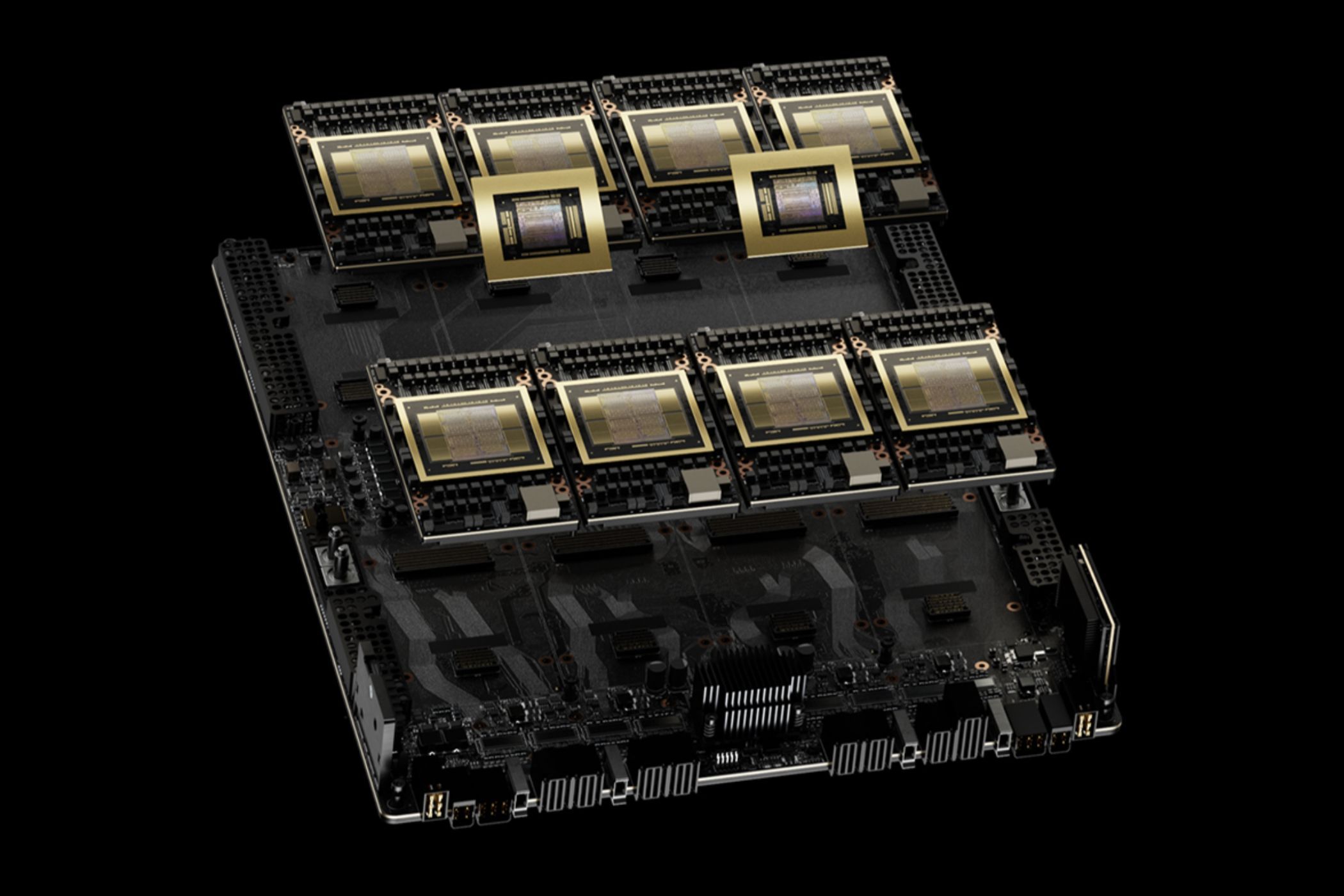

However, NPUs only help with running AI features on-device. Most AI-based software tools and features today run off-device, shifting the processing to a cloud server somewhere else. For example, if you ask an AI chatbot like ChatGPT, Copilot, or Gemini a question, your request is sent to a server, which processes it and sends back the answer. It's important to remember that the servers in those data centers need AI processors, too. In fact, the advanced AI processors that fill server racks in data centers are in much higher demand than consumer-grade chips. Nvidia is currently the industry leader in AI processors, and its H100 GPUs, which are specialized for deep learning and AI tasks, are hard to find, selling for over $40,000 apiece on the secondhand market.

As a result, every player in the tech industry is watching what Nvidia is doing with its advanced GPUs, including the aforementioned H100 and the newly announced H200. These chips, and the cards built around them, are crucial to running the large language models (LLMs) and other generative AI models behind many popular features. Chatbots are the obvious example, but image generators, video generators, and more rely on this hardware as well. The companies creating these software features, like Google, Microsoft, OpenAI, and others, all need advanced AI chips to power their offerings. So, instead of only racing to build the best AI software tools, they're also competing to design and acquire the chips that bring those tools to life.

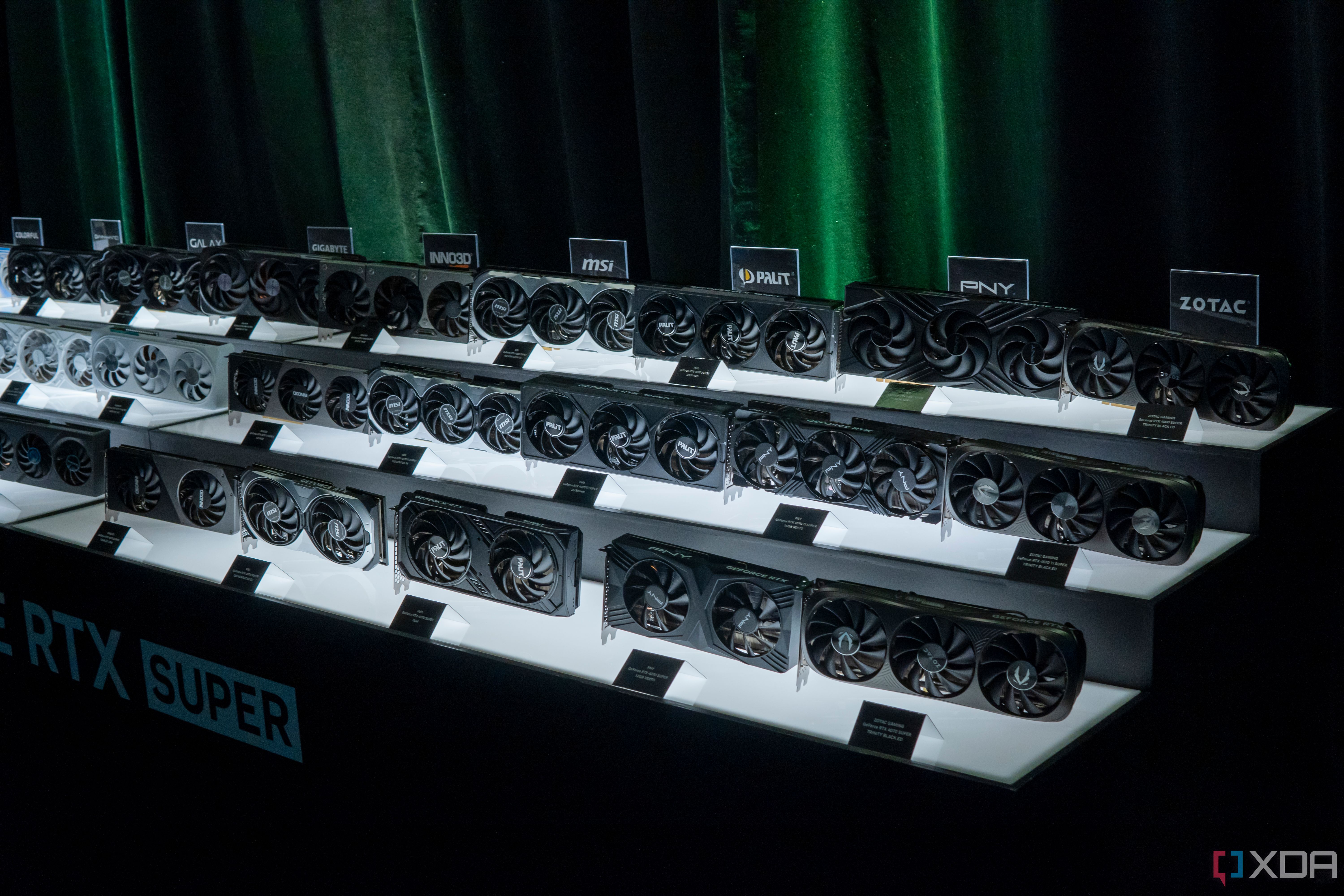

Everyone wants a piece of AI chip creation

Nvidia, Intel, AMD, Google, Meta, and Microsoft are all chasing it

That's why, if you pick the name of a random major tech company, it is likely involved in designing or fabricating AI chips in some form. Google announced a new processor last week called Google Axion, a custom Arm-based chip designed for the data centers that power Google Cloud. Microsoft has two custom processors coming this year, the Azure Maia AI accelerator and the Arm-based Azure Cobalt CPU, which could help it reduce its reliance on Nvidia components. Meta broke into the chipmaking space last year with the Meta Training and Inference Accelerator (MTIA), and it previewed a next-generation version last week. Amazon Web Services has a new custom Trainium2 processor for AI-based tasks, while still using Nvidia chips in its data centers. OpenAI CEO Sam Altman is reportedly looking into custom AI processors, too.

Right now, nearly every company providing AI cloud services relies on Nvidia's H100 GPU. Amazon Web Services, Microsoft Azure, and Google Cloud are three of the biggest cloud providers today, and they all depend on Nvidia hardware to deliver AI, machine learning, and deep learning computation to their customers. Meta also plans to own 340,000 Nvidia H100 GPUs by the end of 2024, according to CEO Mark Zuckerberg, which quietly makes the company a contender as well. However, while these companies rely on Nvidia for now, and have heavily stressed their continued relationships with Nvidia so as not to burn any bridges, that might not be the case forever. Amazon, Microsoft, Google, and Meta are all building their own alternatives, and the company that successfully rivals Nvidia could be the one to win the AI race.

There’s a ton of money in AI chipmaking

Nvidia is winning the race so far, and stock prices are surging

So, it's easy to see that companies are competing to develop better software features and LLMs. What's less obvious on the surface is that Microsoft, Google, Amazon, and Meta also need to win on hardware in order to win on software. Those two aspects of AI are tied together, at least as the market currently stands. Nvidia is the king of AI, and every other company's success depends on getting access to Nvidia GPUs. There's a financial incentive here, too. At the time of writing, Nvidia's stock price has risen 218.50% over the past year, and its dominance in AI chipmaking helped make it a trillion-dollar company. If there's anything we've learned over the course of the latest AI boom, it's that the tech companies most likely to outlast their rivals are the ones that can successfully make their own silicon.
